Integrate with Jenkins

Jenkins is an automation server that lets you build, test, and deploy software at any scale. You can use an instance running on your workstation or an instance running somewhere else.

In this guide, you will add an OpenTestFactory workflow to a Git repository and have it run whenever you push changes to that repository.

You need to have access to a repository on a git server, and your Jenkins instance needs to be able to run a job whenever a change occurs on that repository.

You also need to have access to a deployed OpenTestFactory orchestrator instance, with a corresponding token, that is accessible from your Jenkins instance.

You will use the opentf-ctl tool.

Note

The opentf-jenkins-plugin plugin is deprecated. If you are currently using it, it is recommended to migrate to the process described below.

Preparation

You have to install the opentf-ctl tool on your Jenkins agent. This requires a supported version of Python (version 3.9 or higher) on your agent.

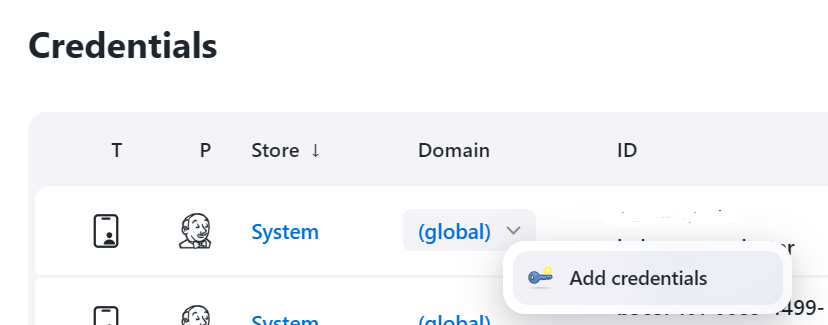

You can define two credentials, a secret text and a secret file. You can define those at your Jenkins instance level, or at your folder level.

If you define them at the instance level, all your jobs will be able to use them. If you define them at the folder level, only the jobs in that folder will be able to use them.

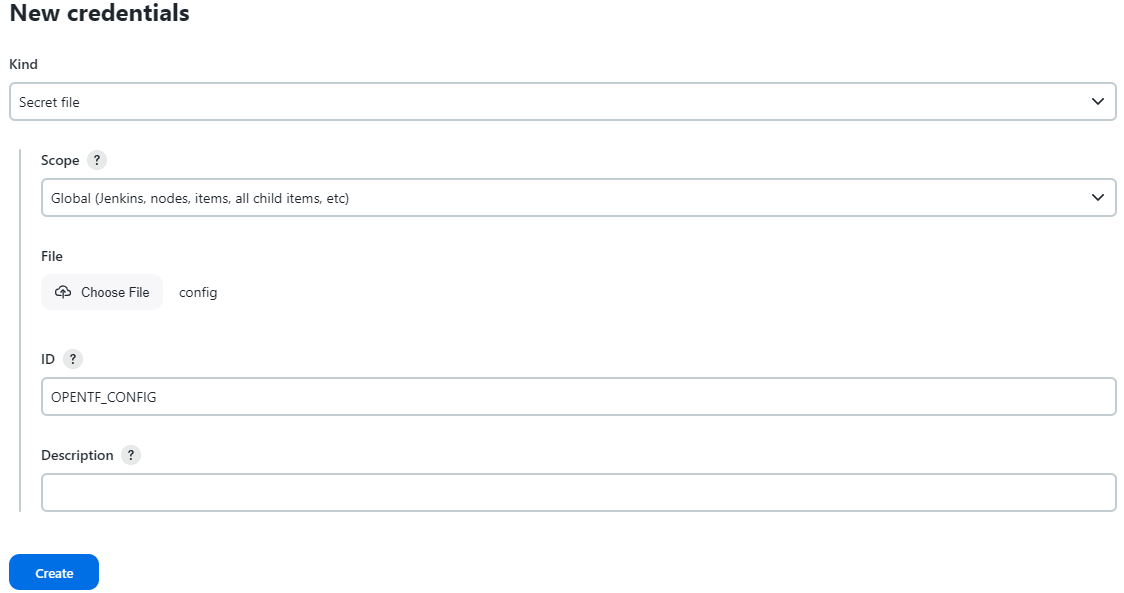

The secret file, often named OPENTF_CONFIG, is typically shared. It references your OpenTestFactory orchestrator instance.

Depending on your preferences and security requirements, you can share the other credential, OPENTF_TOKEN, on your instance or folder.

Information

If you prefer other names for those credentials, you can change them freely, but you may then have to specify them explicitly in your Jenkinsfile, using the --opentfconfig= and --token= command line options below.

OPENTF_CONFIG

The OPENTF_CONFIG secret file should contain your orchestrator configuration file, that is, the information needed to reach your orchestrator instance.

Local Deployment Configuration File Template

If you are using a local deployment, on your workstation, as described in Docker compose deployment, it will probably look something like the following:

    apiVersion: opentestfactory.org/v1alpha1
    contexts:
    - context:
        orchestrator: my_orchestrator
        user: me
      name: my_orchestrator
    current-context: my_orchestrator
    kind: CtlConfig
    orchestrators:
    - name: my_orchestrator
      orchestrator:
        insecure-skip-tls-verify: false
        services:
          receptionist:
            port: 7774
          agentchannel:
            port: 24368
          eventbus:
            port: 38368
          localstore:
            port: 34537
          insightcollector:
            port: 7796
          killswitch:
            port: 7776
          observer:
            port: 7775
          qualitygate:
            port: 12312
        server: http://127.0.0.1
    users:
    - name: me
      user:
        token: aa

Non-local Deployment Configuration File Template

If you are using a non-local deployment, on your intranet or open to the internet, as described in Kubernetes deployment, it will probably look something like the following.

(In the example below, the HTTP protocol is used. If your deployment is using the HTTPS protocol, which is strongly recommended, replace the port values with 443.)

    apiVersion: opentestfactory.org/v1alpha1
    kind: CtlConfig
    contexts:
    - context:
        orchestrator: my_orchestrator
        user: me
      name: my_orchestrator
    current-context: my_orchestrator
    orchestrators:
    - name: my_orchestrator
      orchestrator:
        insecure-skip-tls-verify: false
        services:
          receptionist:
            port: 80
          agentchannel:
            port: 80
          eventbus:
            port: 80
          localstore:
            port: 80
          insightcollector:
            port: 80
          killswitch:
            port: 80
          observer:
            port: 80
          qualitygate:
            port: 80
        server: http://example.com
    users:
    - name: me
      user:
        token: aa
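As a rough illustration of the HTTPS note above, the following Python sketch rewrites an orchestrator entry so that every service uses port 443. The function name `switch_to_https` and the abridged dictionary are hypothetical, and changing the `server` scheme to `https://` is an assumption that goes slightly beyond what the note states. In practice you would edit the YAML file by hand.

```python
import copy


def switch_to_https(orchestrator):
    """Return a copy of an orchestrator entry that uses HTTPS on port 443."""
    updated = copy.deepcopy(orchestrator)
    for service in updated["services"].values():
        service["port"] = 443
    # Assumption: the server URL scheme changes along with the ports.
    updated["server"] = updated["server"].replace("http://", "https://", 1)
    return updated


# Same shape as the "orchestrator" entry in the template above (abridged).
http_entry = {
    "insecure-skip-tls-verify": False,
    "services": {
        "receptionist": {"port": 80},
        "observer": {"port": 80},
    },
    "server": "http://example.com",
}

https_entry = switch_to_https(http_entry)
print(https_entry["server"], https_entry["services"]["observer"]["port"])
```

The original entry is left untouched, so both variants can be compared side by side.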

Testing your Configuration File

If you have not tested your configuration file before, it is a good idea to test it now.

On your workstation, install the opentf-tools package, using the following command:

pip install --upgrade opentf-tools

Tip

The above command will install the most recent version of the package. If you want to install a specific version you can use the following command:

pip install opentf-tools==0.42.0

The list of available versions is on PyPI.

Then, copy your configuration to a file named config in your current directory, and use the following command:

opentf-ctl get workflows --opentfconfig=config --token=YOURTOKEN

You should get something like:

WORKFLOWID

6c223f7b-3f79-4c51-b200-68eaa33c1325

31b5e665-819c-4e92-862a-f05d1993c096

Please refer to “Tools configuration” for more information on making your configuration file if needed.

Defining the credential

In the Manage Jenkins / Credentials section, add a new credential:

Select the Secret file kind, and upload your OPENTF_CONFIG file:

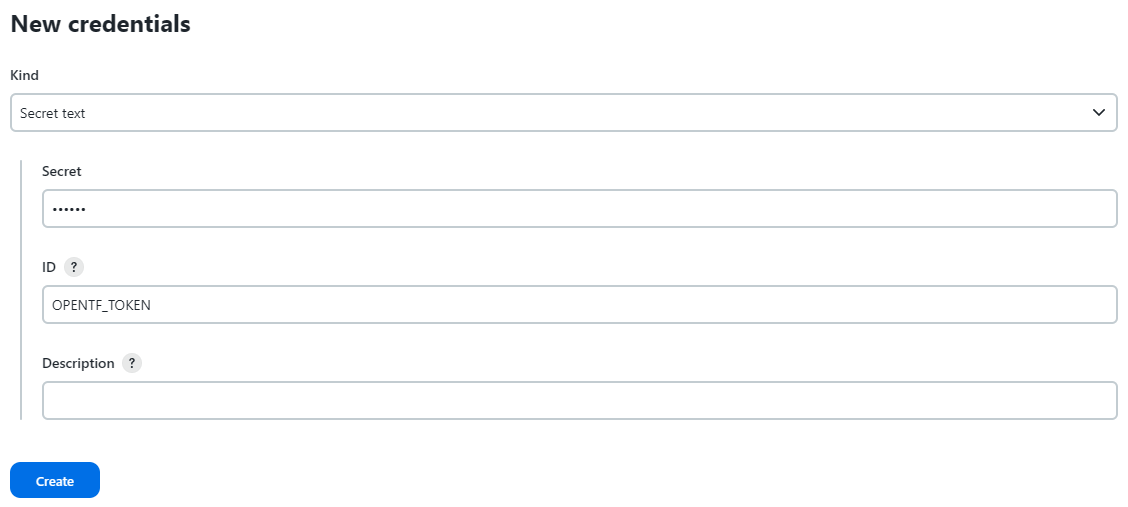

OPENTF_TOKEN

The OPENTF_TOKEN secret should contain the token you want to use to communicate with the orchestrator.

Defining the Variable

Select the ‘Secret text’ kind, enter your token, and give it a name:

Integration

This section assumes a basic knowledge of Jenkins pipelines. See “Getting started with Pipelines” for more information.

The examples below are written in Groovy, the language used by Jenkins pipelines. They assume the opentf-ctl configuration is done on your agents.

If you have defined your credentials on your agent and not on your Jenkins instance, you will have to remove the OPENTF_CONFIG = credentials(...) and OPENTF_TOKEN = credentials(...) statements from the examples:

    pipeline {
        agent any
        environment {
            OPENTF_CONFIG = credentials('OPENTF_CONFIG')
            OPENTF_TOKEN = credentials('OPENTF_TOKEN')
        }
        // ...

Running a Workflow

If you have a .opentf/workflows/workflow.yaml orchestrator workflow in your project, you can use the following Jenkinsfile to run it each time you make changes to your project.

Linux agent:

    pipeline {
        agent any
        environment {
            OPENTF_CONFIG = credentials('OPENTF_CONFIG')
            OPENTF_TOKEN = credentials('OPENTF_TOKEN')
        }
        stages {
            stage('Run Workflow') {
                steps {
                    script {
                        def workflow_command = 'opentf-ctl run workflow .opentf/workflows/workflow.yaml --wait'
                        // no returnStdout here, so the execution log stays visible in the console
                        sh script: workflow_command
                    }
                }
            }
        }
    }

Windows agent:

    pipeline {
        agent any
        environment {
            OPENTF_CONFIG = credentials('OPENTF_CONFIG')
            OPENTF_TOKEN = credentials('OPENTF_TOKEN')
        }
        stages {
            stage('Run Workflow') {
                steps {
                    script {
                        def workflow_command = 'opentf-ctl run workflow .opentf/workflows/workflow.yaml --wait'
                        bat script: workflow_command
                    }
                }
            }
        }
    }

Sharing Files and Variables

Sometimes, you need to share information (variables or files) for your workflow to work.

For variables, you can do it through the environment statement in your Jenkinsfile, prefixing them with OPENTF_RUN_.

For files, you pass them using the -f command line option.

The following example runs a .opentf/workflows/my_workflow_2.yaml workflow, providing it with a file named file (whose content will be that of my_data/file.xml) and two environment variables, FOO and BAR.

Linux agent:

    pipeline {
        agent any
        environment {
            OPENTF_CONFIG = credentials('OPENTF_CONFIG')
            OPENTF_TOKEN = credentials('OPENTF_TOKEN')
            OPENTF_RUN_FOO = 12
            OPENTF_RUN_BAR = 'https://example.com/bar'
        }
        stages {
            stage('Run Workflow') {
                steps {
                    script {
                        def workflow_command = 'opentf-ctl run workflow .opentf/workflows/my_workflow_2.yaml -f file=my_data/file.xml --wait'
                        sh script: workflow_command
                    }
                }
            }
        }
    }

Windows agent:

    pipeline {
        agent any
        environment {
            OPENTF_CONFIG = credentials('OPENTF_CONFIG')
            OPENTF_TOKEN = credentials('OPENTF_TOKEN')
            OPENTF_RUN_FOO = 12
            OPENTF_RUN_BAR = 'https://example.com/bar'
        }
        stages {
            stage('Run Workflow') {
                steps {
                    script {
                        def workflow_command = 'opentf-ctl run workflow .opentf/workflows/my_workflow_2.yaml -f file=my_data/file.xml --wait'
                        bat script: workflow_command
                    }
                }
            }
        }
    }
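The OPENTF_RUN_ naming convention described above can be pictured with a short Python sketch. It is only an illustration of the prefix rule (a variable named OPENTF_RUN_FOO on the agent is shared as FOO), not the code opentf-ctl runs. The function name `workflow_variables` and the sample environment are hypothetical.

```python
PREFIX = "OPENTF_RUN_"


def workflow_variables(environ):
    """Return the prefixed variables with the prefix removed."""
    return {
        name[len(PREFIX):]: value
        for name, value in environ.items()
        if name.startswith(PREFIX) and len(name) > len(PREFIX)
    }


# Hypothetical agent environment, mirroring the Jenkinsfile above.
agent_environ = {
    "OPENTF_CONFIG": "/path/to/config",
    "OPENTF_TOKEN": "not-a-real-token",
    "OPENTF_RUN_FOO": "12",
    "OPENTF_RUN_BAR": "https://example.com/bar",
}

shared = workflow_variables(agent_environ)
print(shared)
```

Note that OPENTF_CONFIG and OPENTF_TOKEN are left out: in this sketch, only names that start with the full OPENTF_RUN_ prefix are shared.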

Applying a Quality Gate to a Completed Workflow

Applying a quality gate to a completed workflow is not mandatory, but it is highly recommended. Without a quality gate, the pipeline will be successful regardless of the test results. When the quality gate is applied to a completed workflow and the workflow does not satisfy the quality gate conditions, the quality gate command exits with a return code of 102, which fails the pipeline.
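To make the role of the return code concrete, here is a minimal Python sketch of how a wrapper script could interpret it. Only the value 102 comes from the text above. Treating 0 as a passed gate and any other value as an unrelated error is an assumption, and the stand-in command replaces a real opentf-ctl call so that the sketch runs anywhere.

```python
import subprocess
import sys

QUALITY_GATE_FAILURE = 102  # return code documented above


def classify(return_code):
    """Map a return code to a short outcome label."""
    if return_code == 0:
        return "passed"  # assumption: 0 means the gate conditions were met
    if return_code == QUALITY_GATE_FAILURE:
        return "quality gate failed"
    return "error"  # assumption: any other code is an unrelated failure


def run_and_classify(command):
    """Run a command without raising on failure and classify its return code."""
    completed = subprocess.run(command, check=False)
    return classify(completed.returncode)


# Stand-in command that exits with 102, as a failed quality gate would.
stand_in = [sys.executable, "-c", "import sys; sys.exit(102)"]
outcome = run_and_classify(stand_in)
print(outcome)
```

In a Jenkinsfile, no such wrapper is needed: the sh and bat steps fail the stage on any non-zero return code.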

There are two possibilities to integrate the quality gate in the pipeline: to apply it directly using the run workflow command (available from the opentf-ctl tool 0.45.0 version) or to retrieve the completed workflow ID first, then to pass it to the get qualitygate command.

The following Jenkinsfile example uses the first approach:

Linux agent:

    pipeline {
        agent any
        environment {
            OPENTF_CONFIG = credentials('OPENTF_CONFIG')
            OPENTF_TOKEN = credentials('OPENTF_TOKEN')
        }
        stages {
            stage('Run Workflow') {
                steps {
                    script {
                        def workflow_command = 'opentf-ctl run workflow .opentf/workflows/workflow.yaml --mode=my.quality.gate'
                        sh script: workflow_command
                    }
                }
            }
        }
    }

Windows agent:

    pipeline {
        agent any
        environment {
            OPENTF_CONFIG = credentials('OPENTF_CONFIG')
            OPENTF_TOKEN = credentials('OPENTF_TOKEN')
        }
        stages {
            stage('Run Workflow') {
                steps {
                    script {
                        def workflow_command = 'opentf-ctl run workflow .opentf/workflows/workflow.yaml --mode=my.quality.gate'
                        bat script: workflow_command
                    }
                }
            }
        }
    }

When the workflow workflow.yaml is completed, the quality gate my.quality.gate is applied to the workflow results.

For the second approach, you need to retrieve the workflow ID first, then apply the quality gate to the workflow:

Linux agent:

    pipeline {
        agent any
        // a declarative pipeline accepts a single environment block
        environment {
            OPENTF_CONFIG = credentials('OPENTF_CONFIG')
            OPENTF_TOKEN = credentials('OPENTF_TOKEN')
            WORKFLOW_ID = ''
        }
        stages {
            stage('Run Workflow') {
                steps {
                    script {
                        // start workflow
                        def workflow_command = 'opentf-ctl run workflow .opentf/workflows/workflow.yaml'
                        def workflow_result = sh(script: workflow_command, returnStdout: true).trim()
                        echo "Workflow result: ${workflow_result}"
                        def workflow_id_match = (workflow_result =~ /Workflow ([\w-]+)/)
                        if (workflow_id_match) {
                            WORKFLOW_ID = workflow_id_match[0][1].toString()
                            echo "Extracted WORKFLOW_ID: ${WORKFLOW_ID}"
                        } else {
                            error("Could not extract WORKFLOW_ID: ${workflow_result}")
                        }
                        // wait for workflow completion
                        def watch_command = "opentf-ctl get workflow ${WORKFLOW_ID} --watch"
                        sh script: watch_command
                    }
                }
            }
            stage('Apply Quality Gate') {
                steps {
                    script {
                        def qg_command = "opentf-ctl get qualitygate ${WORKFLOW_ID} --mode=my.quality.gate"
                        def qg_result = sh(script: qg_command, returnStdout: true).trim()
                        echo "Quality Gate result: ${qg_result}"
                    }
                }
            }
        }
    }

Windows agent:

    pipeline {
        agent any
        environment {
            OPENTF_CONFIG = credentials('OPENTF_CONFIG')
            OPENTF_TOKEN = credentials('OPENTF_TOKEN')
            WORKFLOW_ID = ''
        }
        stages {
            stage('Run Workflow') {
                steps {
                    script {
                        // start workflow
                        def workflow_command = 'opentf-ctl run workflow .opentf/workflows/workflow.yaml'
                        def workflow_result = bat(returnStdout: true, script: workflow_command).trim()
                        echo "Workflow result: ${workflow_result}"
                        def workflow_id_match = (workflow_result =~ /Workflow ([\w-]+)/)
                        if (workflow_id_match) {
                            WORKFLOW_ID = workflow_id_match[0][1].toString()
                            echo "Extracted WORKFLOW_ID: ${WORKFLOW_ID}"
                        } else {
                            error("Could not extract WORKFLOW_ID: ${workflow_result}")
                        }
                        // wait for workflow completion
                        def watch_command = "opentf-ctl get workflow ${WORKFLOW_ID} --watch"
                        bat script: watch_command
                    }
                }
            }
            stage('Apply Quality Gate') {
                steps {
                    script {
                        def qg_command = "opentf-ctl get qualitygate ${WORKFLOW_ID} --mode=my.quality.gate"
                        def qg_result = bat(returnStdout: true, script: qg_command).trim()
                        echo "Quality Gate result: ${qg_result}"
                    }
                }
            }
        }
    }
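The ID extraction used in the pipelines above relies on the regular expression `Workflow ([\w-]+)`. The following Python sketch applies the same pattern outside Jenkins, which can help when checking it against real command output. The sample line is hypothetical: the exact wording printed by opentf-ctl may differ, and the helper name `extract_workflow_id` is made up for this illustration.

```python
import re

# Same pattern as in the Jenkinsfile examples above.
WORKFLOW_ID_PATTERN = re.compile(r"Workflow ([\w-]+)")


def extract_workflow_id(output):
    """Return the first workflow ID found in the output, or raise ValueError."""
    match = WORKFLOW_ID_PATTERN.search(output)
    if match is None:
        raise ValueError(f"Could not extract workflow ID from: {output!r}")
    return match.group(1)


# Hypothetical output line, for illustration only.
sample = "Workflow 6c223f7b-3f79-4c51-b200-68eaa33c1325 is running."
workflow_id = extract_workflow_id(sample)
print(workflow_id)
```

Raising an error when nothing matches mirrors the error(...) call in the pipelines: continuing with an empty ID would only fail later, in a less obvious place.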

Attaching Reports

Running a workflow produces an execution log in the console. You can attach the HTML report to the build result by copying it to the workspace.

The default HTML report is named executionreport.html. You can adjust the example below to match your actual report name.

Linux agent:

    pipeline {
        agent any
        environment {
            OPENTF_CONFIG = credentials('OPENTF_CONFIG')
            OPENTF_TOKEN = credentials('OPENTF_TOKEN')
            OPENTF_RUN_Navigateur = 'firefox'
            WORKFLOW_ID = ''
        }
        stages {
            stage('Run Workflow') {
                steps {
                    script {
                        // start the workflow and capture output
                        def workflow_command = 'opentf-ctl run workflow .opentf/workflows/workflow.yaml'
                        def workflow_result = sh(returnStdout: true, script: workflow_command).trim()
                        def workflow_id_match = (workflow_result =~ /Workflow ([\w-]+)/)
                        if (workflow_id_match) {
                            WORKFLOW_ID = workflow_id_match[0][1].toString()
                            echo "WORKFLOW_ID: ${WORKFLOW_ID}"
                        } else {
                            error("Could not extract WORKFLOW_ID: ${workflow_result}")
                        }
                        def watch_command = "opentf-ctl get workflow ${WORKFLOW_ID} --watch"
                        sh script: watch_command
                    }
                }
            }
            stage('Prepare Workspace') {
                steps {
                    script {
                        sh 'rm -f executionreport.html'
                    }
                }
            }
            stage('Get Attachments') {
                steps {
                    script {
                        sleep(time: 20, unit: 'SECONDS') // Ensure execution report is ready
                        def attach_html = "opentf-ctl cp ${WORKFLOW_ID}:*.html ."
                        sh script: attach_html
                    }
                }
            }
            stage('Publish HTML Report') {
                steps {
                    publishHTML(target: [
                        allowMissing: false,
                        alwaysLinkToLastBuild: true,
                        keepAll: true,
                        reportDir: '.',
                        reportFiles: 'executionreport.html',
                        reportName: 'Test Report',
                        reportTitles: 'Execution report'
                    ])
                }
            }
        }
    }

Windows agent:

    pipeline {
        agent any
        environment {
            OPENTF_CONFIG = credentials('OPENTF_CONFIG')
            OPENTF_TOKEN = credentials('OPENTF_TOKEN')
            OPENTF_RUN_Navigateur = 'firefox'
            WORKFLOW_ID = ''
        }
        stages {
            stage('Run Workflow') {
                steps {
                    script {
                        // start the workflow and capture output
                        def workflow_command = 'opentf-ctl run workflow .opentf/workflows/workflow.yaml'
                        def workflow_result = bat(returnStdout: true, script: workflow_command).trim()
                        def workflow_id_match = (workflow_result =~ /Workflow ([\w-]+)/)
                        if (workflow_id_match) {
                            WORKFLOW_ID = workflow_id_match[0][1].toString()
                            echo "WORKFLOW_ID: ${WORKFLOW_ID}"
                        } else {
                            error("Could not extract WORKFLOW_ID: ${workflow_result}")
                        }
                        def watch_command = "opentf-ctl get workflow ${WORKFLOW_ID} --watch"
                        bat script: watch_command
                    }
                }
            }
            stage('Prepare Workspace') {
                steps {
                    script {
                        bat 'if exist executionreport.html del executionreport.html'
                    }
                }
            }
            stage('Get Attachments') {
                steps {
                    script {
                        sleep(time: 20, unit: 'SECONDS') // Ensure execution report is ready
                        def attach_html = "opentf-ctl cp ${WORKFLOW_ID}:*.html ."
                        bat script: attach_html
                    }
                }
            }
            stage('Publish HTML Report') {
                steps {
                    publishHTML(target: [
                        allowMissing: false,
                        alwaysLinkToLastBuild: true,
                        keepAll: true,
                        reportDir: '.',
                        reportFiles: 'executionreport.html',
                        reportName: 'Test Report',
                        reportTitles: 'Execution report'
                    ])
                }
            }
        }
    }

Recapitulation Example (Windows Agent)

    pipeline {
        agent any
        environment {
            OPENTF_CONFIG = credentials('OPENTF_CONFIG')
            OPENTF_TOKEN = credentials('OPENTF_TOKEN')
            OPENTF_RUN_Navigateur = 'firefox'
            WORKFLOW_ID = ''
        }
        stages {
            stage('Run Workflow') {
                steps {
                    script {
                        def workflow_command = 'opentf-ctl run workflow .opentf/workflows/workflow.yaml --wait'
                        def workflow_result = bat(returnStdout: true, script: workflow_command).trim()
                        echo "Workflow result: ${workflow_result}"
                        def workflow_id_match = (workflow_result =~ /Workflow ([\w-]+)/)
                        if (workflow_id_match) {
                            WORKFLOW_ID = workflow_id_match[0][1].toString()
                            echo "WORKFLOW_ID: ${WORKFLOW_ID}"
                        } else {
                            error("Could not extract WORKFLOW_ID: ${workflow_result}")
                        }
                    }
                }
            }
            stage('Wait for workflow to finish') {
                steps {
                    script {
                        def watch_command = "opentf-ctl get workflow ${WORKFLOW_ID} --watch"
                        bat script: watch_command
                    }
                }
            }
            stage('Prepare Workspace') {
                steps {
                    script {
                        bat 'if exist executionreport.html del executionreport.html'
                    }
                }
            }
            stage('Get Attachments') {
                steps {
                    script {
                        sleep(time: 20, unit: 'SECONDS') // Ensure execution report is ready
                        def attach_html = "opentf-ctl cp ${WORKFLOW_ID}:*.html ."
                        bat script: attach_html
                    }
                }
            }
            stage('Publish HTML Report') {
                steps {
                    publishHTML(target: [
                        allowMissing: false,
                        alwaysLinkToLastBuild: true,
                        keepAll: true,
                        reportDir: '.',
                        reportFiles: 'executionreport.html',
                        reportName: 'Test Report',
                        reportTitles: 'Execution Report'
                    ])
                }
            }
            stage('Apply Quality Gate') {
                steps {
                    script {
                        def qg_command = "opentf-ctl get qualitygate ${WORKFLOW_ID} --mode strict"
                        def qg_result = bat(returnStdout: true, script: qg_command).trim()
                        echo "Quality Gate: ${qg_result}"
                    }
                }
            }
        }
    }

Migration Away from the Legacy plugin

If you were using the opentf-jenkins-plugin plugin, you can migrate to the opentf-ctl tool by following the steps below.

Configuration

You need to define the OPENTF_CONFIG and OPENTF_TOKEN credentials as described above.

If you were using multiple orchestrator configurations or tokens, you will have to specify the corresponding ones in your OPENTF_CONFIG = credentials() and OPENTF_TOKEN = credentials() statements.

Integration

You will have to update your environment variable names. The deprecated plugin used the OPENTF_ prefix, whereas the opentf-ctl tool uses the OPENTF_RUN_ prefix.

You will also have to convert your runOTFWorkflow steps to bat or sh steps, as described above.

Legacy plugin

Warning

Please note that the opentf-jenkins-plugin plugin is deprecated. It does not support the latest orchestrator features, starting with the 2024-11 release.

Preparation

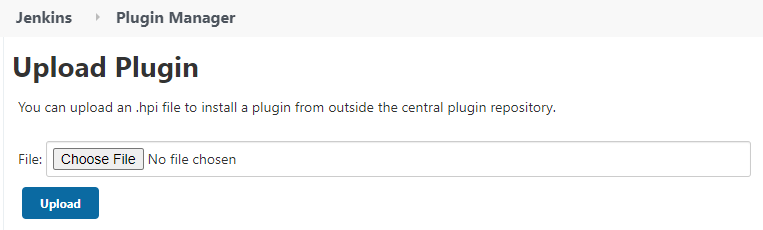

You need to install and configure the opentf-jenkins-plugin plugin. It is compatible with Jenkins version 2.164.1 or higher.

Installation

Upload the opentestfactory-orchestrator.hpi file in the Upload Plugin area, accessible from the Advanced tab of the Plugin Manager in the Jenkins configuration:

Configuration

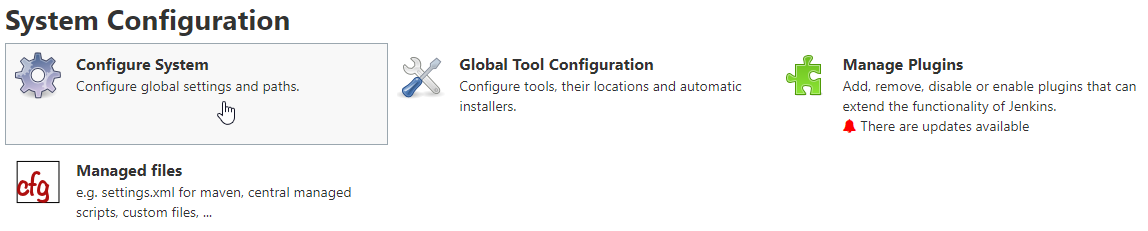

Go to the Configure System page, accessible in the System Configuration space of Jenkins through the Manage Jenkins tab:

A block named OpenTestFactoryOrchestrator servers will then be available:

Server id-

This ID is automatically generated and cannot be modified.

Server name-

Any name you like. You will use it in your pipelines to identify the orchestrator you want to use for your workflows.

Receptionist endpoint URL-

The address of the receptionist service of the orchestrator, with its port as defined for the orchestrator instance.

Workflow Status endpoint URL-

The address of the observer service of the orchestrator, with its port as defined for the orchestrator instance.

Credential-

A Jenkins credential of type Secret text, containing a JWT Token allowing authentication to the orchestrator.

Workflow Status poll interval-

This parameter sets the interval between each update of the workflow status. Checking once every 5 seconds is enough, so setting this to 5S is fine. (The trailing S is the unit, seconds here. You can use H for hours and M for minutes. The case is important.)

Workflow creation timeout-

Timeout used to wait for the workflow status to be available on the observer after reception by the receptionist. A 30-second grace period is usually enough, so setting this to 30S will do. (The trailing S is the unit, seconds here. You can use H for hours and M for minutes. The case is important.)

You can define multiple orchestrator instances if you interact with multiple orchestrators.
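The interval notation used above for the poll interval and the creation timeout (a number followed by an uppercase S, M, or H) can be illustrated with a small Python sketch. This is only a reading aid for the notation, assuming a single whole number followed by one unit letter. It is not the plugin's own parser, and the function name `duration_to_seconds` is hypothetical.

```python
import re

# Units described above; the case is significant.
UNIT_SECONDS = {"S": 1, "M": 60, "H": 3600}
DURATION_PATTERN = re.compile(r"(\d+)([SMH])")


def duration_to_seconds(text):
    """Convert a value such as '5S' or '2M' to a number of seconds."""
    match = DURATION_PATTERN.fullmatch(text)
    if match is None:
        raise ValueError(f"Invalid duration: {text!r}")
    value, unit = match.groups()
    return int(value) * UNIT_SECONDS[unit]


print(duration_to_seconds("5S"))
print(duration_to_seconds("30S"))
```

A lowercase unit such as 5s is rejected in this sketch, in line with the remark that the case is important.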

Local deployment

If you are using a local deployment, on your workstation, as for example described in Docker compose deployment, the endpoints will probably look something like the following:

Server name: orchestrator

Receptionist endpoint URL: http://127.0.0.1:7774

Workflow status endpoint URL: http://127.0.0.1:7775

Workflow Status poll interval: 5S

Workflow creation timeout: 30S

Do not forget to add or specify a credential for your orchestrator token.

Non-local deployment

If you are using a non-local deployment, on your intranet or open to the internet, as for example described in Kubernetes deployment, it will probably look something like the following.

(In the example below, the HTTP protocol is used. If your deployment is using the HTTPS protocol, which is strongly recommended, define the port values as 443.)

Server name: orchestrator

Receptionist endpoint URL: http://example.com

Workflow status endpoint URL: http://example.com

Workflow Status poll interval: 5S

Workflow creation timeout: 30S

Do not forget to add or specify a credential for your orchestrator token.

Integration

Now that the plugin is configured, you are ready to integrate your OpenTestFactory orchestrator within your Jenkins CI.

This section assumes a basic knowledge of Jenkins pipelines. See “Getting started with Pipelines” for more information.

Note

There is no required location for your project’s workflows, but it is a good practice to put them in a single location. .opentf/workflows is a good candidate.

Running a workflow

If you have a .opentf/workflows/workflow.yaml orchestrator workflow in your repository, you can use the following Jenkinsfile to run it each time you make changes to your project.

    pipeline {
        agent any
        stages {
            stage('Sanity check') {
                // declarative stages require a steps block
                steps {
                    echo 'OK pipelines work in the test instance'
                }
            }
            stage('QA') {
                steps {
                    runOTFWorkflow(
                        workflowPathName: '.opentf/workflows/workflow.yaml',
                        workflowTimeout: '200S',
                        serverName: 'orchestrator',
                        jobDepth: 2,
                        stepDepth: 3,
                        dumpOnError: true
                    )
                }
            }
        }
    }

The runOTFWorkflow method allows the transmission of a PEaC to the orchestrator for an execution.

It uses 6 parameters:

workflowPathName-

The path to the file containing the PEaC on your SCM.

workflowTimeout-

Timeout on workflow execution. This timeout will trigger if workflow execution takes longer than expected, for any reason. This aims to end stalled workflows (for example due to unreachable environments or unsupported function calls). It is to be adapted depending on the expected duration of the execution of the various tests in the PEaC.

serverName-

Name of the OpenTestFactory Orchestrator server to use. This name is defined in the OpenTestFactory Orchestrator servers space of the Jenkins configuration.

jobDepth-

Display depth of nested jobs logs in Jenkins output console. This parameter is optional. The default value is 1.

stepDepth-

Display depth of nested steps logs in Jenkins output console. This parameter is optional. The default value is 1.

dumpOnError-

If true, reprint all logs with maximum job depth and step depth when a workflow fails. This parameter is optional. The default value is true.

Information

To facilitate log reading and debugging, logs from test execution environments are always displayed, regardless of the depth of the logs requested.

Sharing files and variables

Sometimes, you need to share information (variables or files) for your workflow to work.

For variables, you can do it through the environment statement in your Jenkinsfile, prefixing them with OPENTF_.

For files, you pass them using the fileResources command parameter.

The following example runs a .opentf/workflows/my_workflow_2.yaml workflow, providing it with a file named file (whose content will be that of my_data/file.xml) and two environment variables, FOO and TARGET.

    pipeline {
        agent any
        environment {
            OPENTF_FOO = 12
            OPENTF_TARGET = 'https://example.com/target'
        }
        stages {
            stage('Greetings') {
                steps {
                    echo 'Hello!'
                }
            }
            stage('QA') {
                steps {
                    runOTFWorkflow(
                        workflowPathName: '.opentf/workflows/my_workflow_2.yaml',
                        workflowTimeout: '200S',
                        serverName: 'orchestrator',
                        jobDepth: 2,
                        stepDepth: 3,
                        dumpOnError: true,
                        fileResources: [ref('name': 'file', 'path': 'my_data/file.xml')]
                    )
                }
            }
        }
    }